How, where, and why IBM PureApplication fits in your cloud

Introduction

Cloud computing delivers a set of technology capabilities and business functions as services on demand over the Internet or on a private network. The “cloud” is the infrastructure that the services run on, generally comprised of hypervisors, virtual machines, containers, storage devices, and networking devices – as well as the workload management technologies that make it possible to effectively deliver those services.

Using technologies such as middleware and web-based software as services saves money, expedites development and deployment time, and reduces resource overhead. Users access only what they need for a particular task and benefit from the flexibility, economies of scale, and optimized management of resources.

IBM® PureApplication® systems introduce out-of-the-box capabilities that improve the way we create and deliver cloud solutions by simplifying the creation and reuse of applications and topologies. You get infrastructure patterns of expertise from IBM and its partners, as well as a platform that is optimized for enterprise applications. PureApplication systems also integrate well with Docker containers, Chef Recipes, IBM Bluemix™, and OpenStack.

PureApplication product family

The PureApplication product family has three members:

- IBM PureApplication System

- IBM PureApplication Software

- IBM PureApplication Service

A PureApplication System is a cloud-in-a-box configuration. It is a fully integrated system that includes hardware, software, hypervisors, and network devices, all converged in a single physical box and managed through a unified console. The PureApplication System box comes in multiple configurations, supporting either x86 or POWER based cores.

PureApplication Software is a software version of the PureApplication deployment engine and management console. This member of the family requires you to bring your own hardware (BYOH). Instead of buying a “cloud in a box,” you can leverage existing infrastructure. This involves installing the software on a virtual machine that runs on a VMware vSphere Hypervisor, and then configuring it to work with one or more existing hypervisors, including the same hypervisor that hosts the PureApplication software itself.

Whereas a PureApplication System can support Red Hat Linux®, Windows®, or AIX®, depending on whether the machine is x86 or POWER® based, PureApplication Software currently supports only RHEL 5.x, RHEL 6.x (32-bit and 64-bit), Windows 2008 R2 (64-bit), Windows 2012 (64-bit), and Windows 2012 R2 (64-bit).

PureApplication Service on SoftLayer® is based on the same idea as PureApplication Software, but leverages SoftLayer’s worldwide data centers to provide an off-premise option. This service provides dedicated hardware configured as PureApplication Software on the IBM SoftLayer cloud. PureApplication Service is also based on x86 architecture and VMware virtualization technology.

Patterns developed with one member of the PureApplication family can be shared with other members with little or no change. For example, a pattern created on a PureApplication System on premise can be deployed onto a SoftLayer cloud environment (off-premise) using the PureApplication Service on SoftLayer.

Cloud service delivery models

Cloud offers three service delivery models: Infrastructure as a Service (IaaS), Platform as a Service (PaaS), and Software as a Service (SaaS). These models determine the levels of sharing and possible multi-tenancy that a cloud provider can offer its users. As Figure 1 illustrates, at each level in the stack, tenants share components that are part of that delivery model.

Infrastructure as a Service

At the lowest layer is IaaS, where tenants share infrastructure resources such as processors, network, and storage, as well as the operating system. Tenants install their own middleware components and applications, which gives them more flexibility, but also makes configuration and maintenance more difficult. In other words, IaaS provides users with shared computing capacity, network-accessible storage, and an OS. Everything else tenants install themselves and do not share.

Platform as a Service

PaaS is a layer above IaaS and provides middleware components, databases, storage, connectivity, reliability, caching, monitoring and routing. PaaS builds upon IaaS to deliver progressively more business value. Tenants continue to use their individual applications, but can leverage PureApplication’s patterns of expertise for transaction-oriented web and database applications, as well as shared middleware services such as monitoring, security, and databases. PureApplication systems play a key role in this space, providing a pre-configured, open platform for PaaS solutions.

Software as a Service

With SaaS, tenants share everything that they would in an IaaS and PaaS solution, plus an application. In this case, all tenants share the same application, but keep their data isolated. With SaaS, it is easier to add new tenants because the client simply selects and customizes a cloud application without worrying about building the middleware or installing the application. There is little left for the client to do.
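The layering described in the three subsections above can be sketched as a small lookup. This is only an illustration of the text: the layer names and the `shared_layers` and `tenant_owned_layers` helpers are assumptions introduced here, not part of any PureApplication API.

```python
# Layers of the stack, lowest first, as described in the text above.
STACK = ["infrastructure", "operating system", "middleware", "application"]

# Highest layer that tenants share under each delivery model.
SHARED_UP_TO = {
    "IaaS": "operating system",
    "PaaS": "middleware",
    "SaaS": "application",
}


def shared_layers(model):
    """Return the layers that tenants share under the given delivery model."""
    top = STACK.index(SHARED_UP_TO[model])
    return STACK[: top + 1]


def tenant_owned_layers(model):
    """Return the layers each tenant installs and maintains on its own."""
    top = STACK.index(SHARED_UP_TO[model])
    return STACK[top + 1:]


for model in ("IaaS", "PaaS", "SaaS"):
    print(model, "| shared:", shared_layers(model),
          "| tenant-owned:", tenant_owned_layers(model))
```

The sketch deliberately leaves out data: even under SaaS, where every layer is shared, each tenant's data stays isolated.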

Cloud deployment models

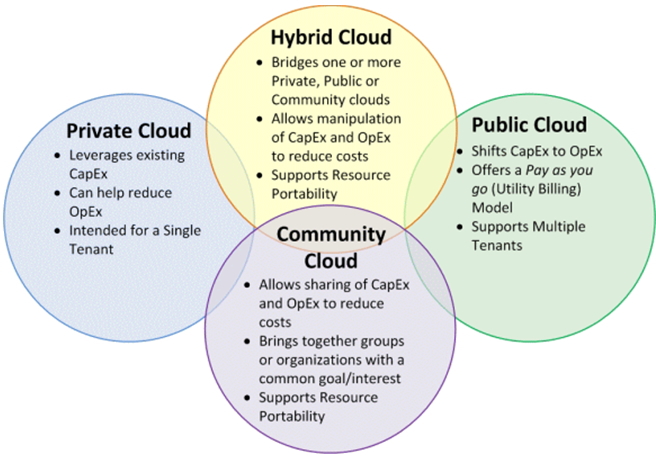

As Figure 2 shows, cloud computing has four deployment models.

Figure 2. Cloud deployment models

Public cloud

A public cloud is open to the general public. The cloud infrastructure exists on the premises of the cloud provider, and may be owned, managed, and operated by one or more entities. One of the main reasons companies move to a public cloud is to replace their capital expenses (CAPEX) with operating expenses (OPEX). A public cloud uses a “pay as you go” pricing model, which means the consumer does not need to buy the necessary hardware to cover peak usage up front, and does not have to worry about correctly “forecasting” capacity requirements. This “pay as you go” pricing model, often referred to as utility computing, enables consumers to use compute resources as they would a utility service. They pay only for what they use, and get the impression of unlimited capacity, available on demand. In this deployment model, consumers do not normally care about where or on what hardware the processing is done. They trust the cloud provider will maintain the necessary infrastructure to run their applications and provide the requested service at their required Service Level Agreement (SLA).

Private cloud

A private cloud is deployed for the exclusive use of an organization. The organization or a third party can own, manage, and host it, and its infrastructure can exist on or off premises. When a third party manages the cloud, it is called a managed private cloud. When the private cloud is hosted and operated off premises, it is called a hosted private cloud. IBM SoftLayer, for example, hosts a public cloud, but also provides private cloud services, where consumers can build, manage, and have total control of the virtual servers they use.

Here are some of the reasons why companies adopt private cloud solutions:

- Leverage existing hardware investments. If there is already a significant investment in hardware on-premise, many companies will prefer to just consolidate their IT resources, and use cloud technologies to provide automation, self-service, and better integrated management tools to reduce their total operating costs.

- Data security and trust. Many clients have concerns over data security and issues of trust with multiple client organizations sharing the same resources. Because of this, clients often start their cloud venture behind an enterprise firewall or in completely isolated environments.

- Resource contention. In public cloud environments, resources are shared among multiple customers. A company might prefer exclusive use of hardware, such as servers and load balancers to handle specific workloads or to obtain higher availability of systems and applications at specific times.

A fair question to ask is what an on-premise private cloud has to offer an organization that already has a highly virtualized environment with scripts already written to provision new applications. The answer is that a private cloud does not just make the provisioning easier, but also provides a way to offer cloud-based services to your internal organization.

With PureApplication systems, you get dynamic resource scaling, self-service, a highly standardized infrastructure, a workload catalog with ready to run workloads, approvals, metering, and integrated management through a single console. PureApplication systems also give you the ability to leverage a standard catalog of virtual machines or virtual appliances that can be provisioned at any time, and expanded on demand to more quickly respond to changing business needs. Virtualization alone does not give you these things.

Hybrid cloud

A hybrid cloud consists of two or more different cloud infrastructures that remain distinct but share technologies that enable porting of data and applications from one to the other. Hybrid cloud solutions provide interoperability of workloads that can be managed across multiple cloud environments. This includes access to third party resources and to a client partner network. The idea is to seamlessly link on-premise applications — whether home-grown, packaged, or running on a private cloud — with off-premise clouds. This is an area where PureApplication systems excel since you can create and deploy patterns on-premise with a PureApplication System or PureApplication Software, and also deploy those patterns off-premise onto SoftLayer infrastructure using PureApplication Service on SoftLayer.

For many enterprises, hybrid and multi-cloud implementations are the end goal, as they offer the most savings and flexibility. Here are some scenarios that might explain why:

- An organization might host its normal workload in a private cloud, and use a public cloud to handle its heavier traffic.

- An organization might use a public cloud to host an application, and place the underlying database in a more secure private cloud.

- A company might use a private cloud to host some of its workload, and a public cloud for specific uses (for example, backup and archiving).

- A team might decide to split the location of an application based on its life cycle stage. For example, it might choose to do development in house and then go live in a cloud environment.

Community cloud

A community cloud is for the exclusive use of a community, which is a group of people from different organizations that share a common interest or mission. This type of cloud can be owned, managed, and hosted by one or more members of the community, a third party, or a combination of both, and can exist on the premises of one of the parties involved, or off premise for everyone. Vertical markets and academic institutions in particular can benefit from community clouds to address common concerns. For example, technology companies working together on a new specification can use a community cloud to share resources, proofs-of-concepts, and internal incubation projects.

Essential characteristics of a cloud environment

The National Institute of Standards and Technology (NIST), which was founded in 1901 to promote U.S. innovation through measurement science, standards, and technologies, identifies five essential characteristics of any cloud environment:

- Broad network access. The capabilities of a cloud should be available over a broad network and accessible via standard devices such as workstations, laptops, tablets, and mobile phones.

- On-demand self-service. The environment should support a “do-it-yourself” model where consumers can provision resources in an automated fashion through a web browser or via an application programming interface (API), without requiring human interaction with the service provider.

  Besides a UI that provides workload and administration consoles for creating, deploying, and managing pattern-based cloud solutions, PureApplication systems offer a REST API and a command-line interface (CLI) that enable service providers to create their own “do-it-yourself” interfaces for consumers.

- Resource pooling. The system should pool resources so that they can easily be shared between multiple consumers. Shared resource pools enable the system to assign or reassign resources as needed based on demand. This is especially useful to support multi-tenancy models where multiple organizations or tenants share the same resources in a cloud environment.

  Maximizing the use of hardware resources is also particularly important when multiple VMs share them. Resource pooling treats a collection of hardware resources (compute, memory, storage, and networking bandwidth) as a single “pool” of resources available on demand. This enables hypervisors and higher-level programs to dynamically assign and reassign resources based on demand and priority levels. Resource pooling is what enables multiple organizations or tenants to effectively share resources in a cloud environment.

- Rapid elasticity. The environment should be able to automatically (or at least quickly) add or remove compute resources based on workload demand without interrupting the running system.

  The term workload generally refers to the processing demand placed on a computer resource at a given time. Within PureApplication, it also means the deployed form of a virtual application (application-centric), virtual system (middleware-centric), or virtual appliance (machine-centric). Within the context of cloud, phrases like “provisioning a workload” or “deploying a workload” refer to enabling that virtualized application with everything else needed to run it, including the VM, the OS, and supplemental files. Asking how well a given server can handle a workload means asking how well it can handle the compute, memory, disk, and networking resource demands of that deployed virtual system, virtual application, or virtual appliance.

  Not all workloads are the same. The resources needed for an I/O-intensive workload, for example, will be different from those needed for a compute- or memory-intensive workload. Not all workloads require the same Quality of Service (QoS) levels either. Figuring out which workloads will run best under different environments can be a very effective way of reducing costs. This is where hybrid clouds and, in particular, the PureApplication family of products play an important role.

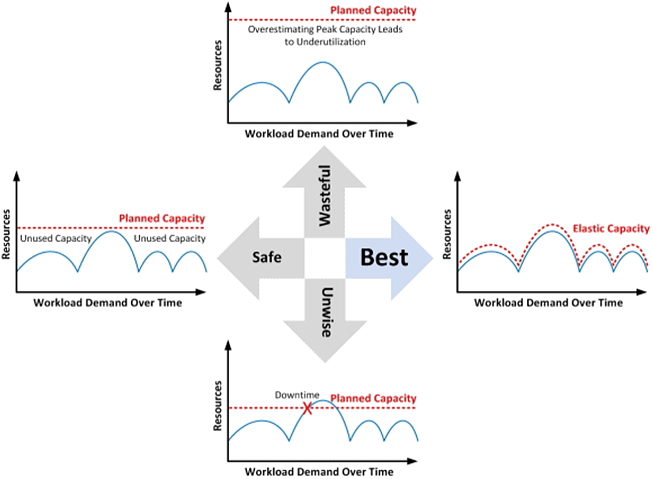

Capacity planning for particular workloads can be challenging. As Figure 3 shows, the usual pain points are known: not planning for enough capacity to meet workload demands is unwise and will lead to downtime. Conversely, overestimating capacity requirements will result in one or more servers being underutilized or sitting idle. Even correctly allocating capacity for peak usage is not enough because workload demands fluctuate, and capacity will be wasted when the system is not running at peak level. The end of a test cycle, for example, might be followed by significantly lower utilization of the hardware. Even with the best capacity planning in place, there will be cases where the workload demand is simply unpredictable. The ideal situation is to allocate only the capacity required at any given time. This is called elasticity, and it is an important characteristic of any cloud environment.

Figure 3. Elasticity

- Measured service. Finally, a cloud environment must be able to meter and rate the resources that are being used, and by whom, both to better manage workloads and optimize their execution and to give the consumer a transparent view of resource utilization.
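The capacity-planning trade-off behind elasticity can be made concrete with a little arithmetic. The sketch below compares a fixed allocation with an elastic one over a series of sample periods. The demand figures and function names are hypothetical, chosen only to illustrate the idea; the "allocated" totals are the kind of quantity a measured service would meter.

```python
# Hypothetical demand, in "server units", over 12 sample periods.
demand = [2, 2, 2, 3, 5, 8, 12, 15, 14, 10, 6, 3]


def fixed_capacity_report(demand, capacity):
    """Compare a fixed allocation against demand, period by period."""
    # Capacity that is provisioned but sits idle.
    idle = sum(max(capacity - d, 0) for d in demand)
    # Demand that cannot be served because capacity is too low.
    shortfall = sum(max(d - capacity, 0) for d in demand)
    return {"allocated": capacity * len(demand), "idle": idle, "shortfall": shortfall}


def elastic_capacity_report(demand):
    """Ideal elasticity: allocate exactly what each period requires."""
    return {"allocated": sum(demand), "idle": 0, "shortfall": 0}


print("sized for peak:", fixed_capacity_report(demand, max(demand)))
print("undersized:    ", fixed_capacity_report(demand, 8))
print("elastic:       ", elastic_capacity_report(demand))
```

With these sample numbers, sizing for the peak leaves more than half of the allocated capacity idle, while the undersized allocation cannot serve demand in four of the twelve periods. Real elasticity is never perfectly instantaneous, so the elastic figures are a lower bound rather than a prediction.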

It’s all about virtual patterns

At the heart of every PureApplication system is what we informally call the “pattern engine.” The pattern engine is found in all three PureApplication product family members, as well as in other IBM cloud offerings such as IBM SmartCloud Orchestrator.

The pattern engine enables us to use the following deployment methods:

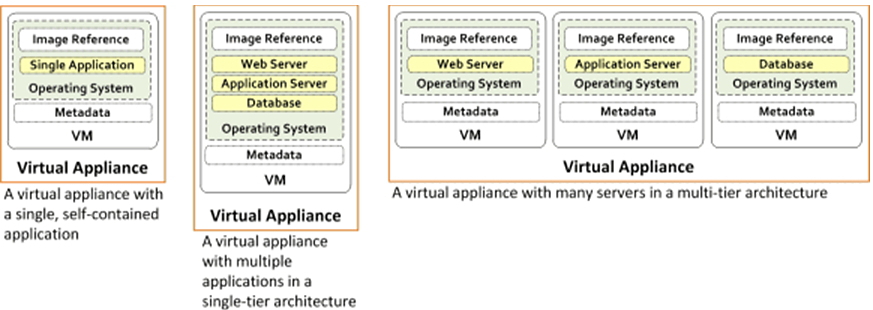

- Virtual appliances. A virtual image or virtual appliance is a pre-configured virtual machine (VM) that you can use or customize. Virtual appliances are hypervisor editions of software and represent the basic parts you use in a PureApplication system to build more complex topologies. Adding new virtual images to the PureApplication catalog enables you to deploy multiple instances of that appliance from a single virtual appliance template.

  Virtual appliances are portable, self-contained configurations of a software stack. They are also called virtual images and are usually built to host a single business application. The industry standard for the format of virtual appliances is the Open Virtualization Format (OVF), published by the Distributed Management Task Force (DMTF). Member companies such as IBM, VMware, Citrix, Microsoft, and Oracle all support OVF in their products.

  As Figure 4 shows, the OVF definition of a virtual appliance can support a single virtual machine with a single application or multiple applications in a single-tier architecture. However, OVF also supports having multiple virtual machines packaged as a single virtual appliance.

Be aware that PureApplication systems do not support this last approach, but instead provide a more flexible, reusable solution. With PureApplication systems, you can deploy multiple VMs or Docker Containers as part of a pattern, and then use links and script packages to orchestrate the interaction between them. This enables the virtual images and containers as well as the script packages to be independently reused in other patterns.

Figure 4. Virtual appliances

- Virtual appliances are important because they offer a new way of creating, distributing, and deploying software. Having an abstract layer above the hypervisor, and being able to package the software and distribute it as a pre-configured and “ready to run” unit, reduces the provisioning and deployment time of applications, which shortens time to value. It also improves the quality of the final deliverable. A completely configured application that does not require installation and configuration is less prone to errors.

- Virtual system patterns. Virtual system patterns enable you to graphically describe a middleware topology to be built and deployed onto the cloud. Using virtual images or parts from the catalog, as well as optional script packages and add-ons, you can create, extend, and reuse middleware-based topologies. Virtual system patterns give you control over the installation, configuration, and integration of all the components necessary for your pattern to work.

- Classic virtual system patterns. Classic virtual system patterns are an earlier version of virtual system patterns. As with virtual system patterns, classic virtual system patterns enable you to graphically create a logical view of a middleware topology, but there is a difference. Classic virtual system patterns are mainly provided for backwards compatibility; for example, they do not permit you to include policies for scaling, security, placement, and iFixes. Such policies were previously a feature only of virtual application patterns. Fortunately, PureApplication lets you “promote” a classic virtual system pattern to a virtual system pattern.

- Virtual application patterns. A virtual application pattern, also called a workload pattern, is an application-centric approach for deploying applications onto the cloud. With virtual application patterns, you do not worry about the topology required to run your application, but instead specify an application (for example, an .ear file) and a set of policies that correspond to the service level agreement (SLA) you wish to achieve. PureApplication systems then transform that input into an installed, configured, and integrated middleware application environment. The system also automatically monitors application workload demand, adjusts resource allocation, and fine-tunes prioritization to meet your defined policies. Virtual application patterns address specific solutions, incorporating years of expertise and best practices.

- Choosing the right type of pattern. Using virtual application patterns clearly involves the least amount of work and, in one sense, represents the ideal situation. You can create an application and let PureApplication worry about the infrastructure required to meet a specified quality of service. This promises the lowest total cost of ownership and the shortest time to value. However, not all configurations fit easily into an available virtual application pattern type. There are times when you need more granular control.

  Virtual system patterns and classic virtual system patterns enable you to specify the exact topology you need to support your application while still benefiting from the reusability of patterns. With virtual system patterns, you can also take advantage of features such as auto-scaling.

  So the general rule of thumb is this: try to leverage the optimization and convenience of virtual application patterns unless you need to control the topology and manage the environment through administrative consoles.
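The rule of thumb above can be written down as a small decision helper. This is an illustrative sketch only: `choose_pattern_type` and its parameters are hypothetical names introduced here, and the handling of existing classic patterns is one reading of the text, not a documented PureApplication procedure.

```python
def choose_pattern_type(needs_topology_control, is_existing_classic_pattern=False):
    """Suggest a pattern type following the rule of thumb in the text."""
    if is_existing_classic_pattern:
        # Classic patterns exist mainly for backwards compatibility and can
        # be promoted to virtual system patterns.
        return "classic virtual system pattern (consider promoting it)"
    if needs_topology_control:
        # Exact topology and administrative-console management are required.
        return "virtual system pattern"
    # Default: let the system derive the infrastructure from policies.
    return "virtual application pattern"


print(choose_pattern_type(needs_topology_control=False))
print(choose_pattern_type(needs_topology_control=True))
```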

Understanding hypervisors, virtual machines, and containers

Using virtualization to consolidate resources, reduce space, and save on energy costs is one of the key things that make cloud computing work. IBM first developed virtualization in the 1960s to multiplex its expensive mainframe computers. In 1967, the first fully virtualized machine, the IBM S/360-67, appeared in the market, and by 1972 virtual machines had become a standard feature of all S/370 mainframes.

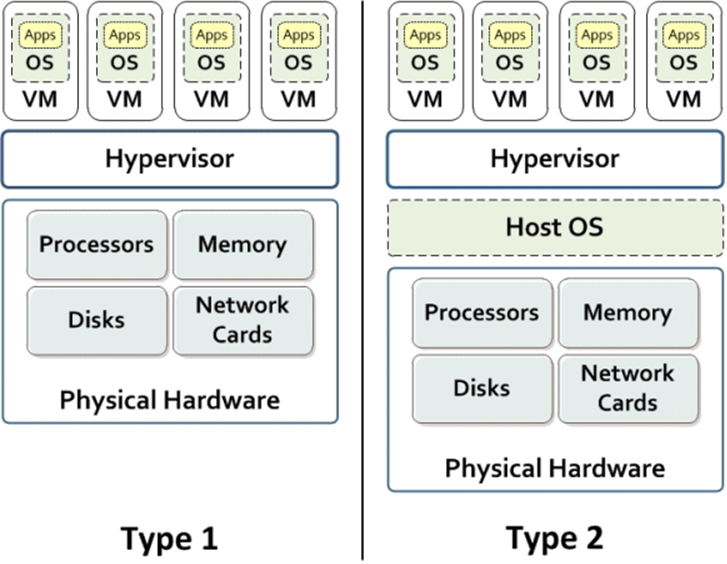

Hypervisors provide platform virtualization, which means they logically divide a physical machine into multiple virtual machines (VMs) or guests. A hypervisor, also called a virtual machine manager (VMM), controls and presents a machine’s physical resources as virtual resources to the VMs. Hypervisors enable other software (usually operating systems) to run concurrently, as if they had full access to the real machine.

As Figure 5 shows, there are two types of hypervisors: Type 1, which run directly on the physical hardware, and Type 2, which require a host operating system to run. Examples of Type 1 hypervisors include IBM z/VM®, IBM PowerVM®, and VMware vSphere/ESX/ESXi Server. Others include Citrix Xen and Microsoft® Hyper-V®. Because they run on top of the hardware itself, Type 1 hypervisors are also called native or bare-metal hypervisors.

Figure 5. Types of hypervisors

Examples of Type 2 hypervisors include VMware Workstation, VMware Server, Kernel-Based Virtual Machine (KVM), and Oracle® VM VirtualBox. Type 2 hypervisors are also known as hosted hypervisors.

PureApplication uses collections of hypervisors arranged into cloud groups to help provision virtual machines and provide the cloud’s virtualization capabilities. In the PureApplication pattern builder, each part dropped onto the canvas generally corresponds to a virtual machine with its own operating system and usually one or more middleware components.

Linux Containers

Version 2.6.24 of the Linux kernel introduced support for Linux Containers (LXC), which are a lightweight alternative to full hardware virtualization. Instead of the platform virtualization that hypervisors provide, containers provide OS level virtualization. They enable a single machine or VM to operate multiple Linux instances (Linux containers), each isolated in its own operating environment within the OS. All containers run under the same kernel but each maintains its own process and network space.

The two main features behind Linux Containers are namespaces and control groups (cgroups). Namespaces isolate Linux resources at the process level, ensuring that a container only sees its own environment, while cgroups help control, account for, and isolate the resources that a collection of processes can use.
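On a Linux host, both mechanisms are visible through the `/proc` filesystem. The sketch below parses the `hierarchy-ID:controller-list:cgroup-path` lines found in `/proc/self/cgroup` and lists the namespace links under `/proc/self/ns`. The helper names and the sample line are assumptions made for illustration; on a non-Linux system the two reader functions simply return empty results.

```python
import os
from pathlib import Path


def parse_cgroup_line(line):
    """Parse one 'hierarchy-ID:controller-list:cgroup-path' line."""
    hierarchy_id, controllers, path = line.strip().split(":", 2)
    return {
        "hierarchy_id": int(hierarchy_id),
        # The controller list is empty for the unified (cgroup v2) hierarchy.
        "controllers": controllers.split(",") if controllers else [],
        "path": path,
    }


def current_cgroups(proc_file="/proc/self/cgroup"):
    """Return the cgroup memberships of this process, or [] if unavailable."""
    p = Path(proc_file)
    if not p.exists():
        return []
    return [parse_cgroup_line(l) for l in p.read_text().splitlines() if l.strip()]


def current_namespaces(ns_dir="/proc/self/ns"):
    """Return a {namespace type: identifier} map, or {} if unavailable."""
    result = {}
    try:
        for name in os.listdir(ns_dir):
            result[name] = os.readlink(os.path.join(ns_dir, name))
    except OSError:
        pass
    return result


# Hypothetical sample line, as it might appear inside a container.
print(parse_cgroup_line("4:cpu,cpuacct:/docker/abc123"))
print(current_cgroups())
print(current_namespaces())
```

Two processes that report the same identifier for a given namespace type share that namespace; a process inside a container typically reports different identifiers from a process on the host.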

Docker Containers

Docker is an open source container-based technology that enables developers to build, ship, and run applications unchanged on any infrastructure, whether it is a laptop, or a VM in a cloud somewhere. Docker containers build upon the LXC low-level functions to yield a much smaller footprint and greater portability than virtual machines. Launching new containers is also much faster than launching new VMs.

In short, Docker replaces sandboxing with containerization. While an application deployed on a virtual machine comes bundled with all the necessary dependencies, such as its binaries and libraries as well as the guest OS, a Docker container requires only the application and its dependencies, and a lightweight runtime and packaging tool called the Docker Engine. As with LXC, all Docker containers share the same host OS and kernel.

Docker also includes a public registry for sharing applications called the Docker Hub, which has more than 13,000 containers (and counting) ready for reuse or to be used as base images for new containers.

PureApplication systems provide support for using Docker containers in patterns in PureApplication System W2500 and W1500 models and on PureApplication Software. If you have the Docker Pattern type installed and enabled in the system, you can drop Docker containers from the pattern builder as software components onto the canvas of your virtual system patterns. You can reference Docker containers from the Docker Hub or from a private Docker registry already included in the PureApplication system.

With PureApplication, you can create and deploy single- and multi-container Docker applications. PureApplication also provides multi-node orchestration of Docker containers: within the pattern builder, you can connect containers across nodes simply by linking them. It also enables you to update container images and propagate those changes across containers, as well as scale the number of containers out and in.

Chef support

Chef is an automation platform from Opscode that lets you programmatically describe how to configure and manage infrastructure. Chef treats infrastructure as code, which means you can version and test it, just like any application.

Each machine in a Chef system plays a specific role:

- A Chef node is any system that Chef manages; it can sit on a physical server, a virtual machine, or a container. All nodes run the Chef client software.

- The Chef server is the centralized store for configuration information. It is basically a large repository containing all the configuration files that clients can use. The server also organizes the data so that clients can pull it easily.

- A Chef workstation is a computer used to upload configuration changes to the Chef server. Configuration files uploaded to the Chef server are made available to be deployed to any node.

PureApplication System models W2500, W2700, W1500, and W1700 as well as PureApplication Software support Chef. The integration of PureApplication with Chef enables new possibilities, such as deploying pattern instances with nodes that can automatically update themselves based on recipes pushed to the Chef server. Also, the open Chef community has thousands of recipes that you can reuse and inject into PureApplication patterns, or you can create your own recipes and upload them to a Chef server from within PureApplication.

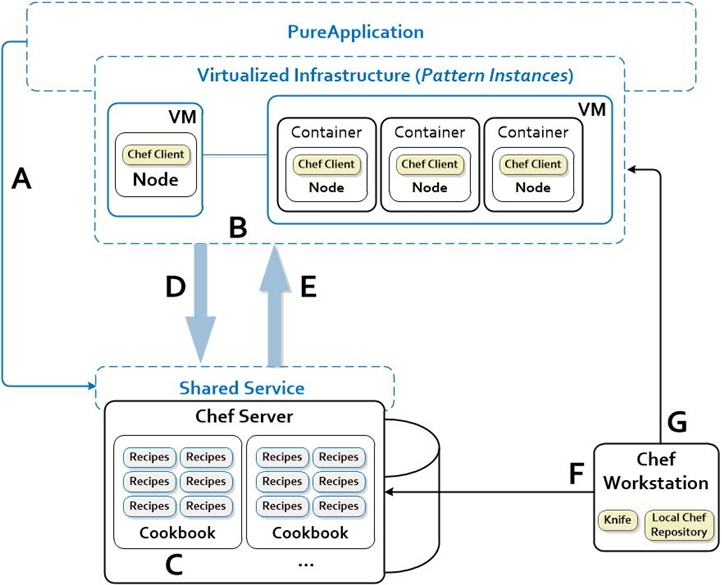

Figure 6. Chef deployments with PureApplication

Figure 6 illustrates how PureApplication supports Chef deployments:

- Within PureApplication, you can connect to a Chef Server through an external shared service, and add Chef clients as software components onto the PureApplication nodes of a virtual system pattern.

- Chef clients can be nodes that are virtual machines, or Docker containers within a virtual machine. Chef clients deployed via PureApplication also use the shared service to communicate with the Chef server.

- The Chef server contains cookbooks and recipes that describe how to define, provision, and configure application resources in Chef clients. Chef recipes are the configuration tasks or instructions that you write to install and configure resources on a node. A cookbook is a collection of related recipes.

- Chef clients periodically poll the Chef server for updates to the cookbooks or settings. If updates are available, the client pulls the content from the server. PureApplication lets you specify how often clients should poll the server for updates.

- The Chef server sends the latest versions of the cookbooks and recipes to requesting clients. Each Chef client updates itself with the configuration information it receives from the server.

- A Chef workstation is any external machine, such as your laptop, that is configured as a local Chef repository and has the Knife tool installed and configured. Knife is a command-line tool that, among other things, lets you upload cookbooks and recipes to the Chef server. You create and edit configurations and definitions on a workstation, and commit them to version control before sending them to the server. PureApplication allows you to directly add recipes to run at install time.

- The Knife tool is also regularly used to communicate with the nodes via SSH.
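The pull model described in the steps above, where clients poll the server and apply whatever is newer, can be sketched with two small stand-in classes. Nothing here uses the real Chef or PureApplication APIs: `ChefServerStub`, `ChefClientStub`, and the cookbook and recipe names are all hypothetical and exist only to show the flow of updates.

```python
class ChefServerStub:
    """Stand-in for a Chef server: keeps the latest version of each cookbook."""

    def __init__(self):
        self.cookbooks = {}

    def upload(self, name, version, recipes):
        # In a real setup, a workstation would upload cookbooks with Knife.
        self.cookbooks[name] = {"version": version, "recipes": list(recipes)}

    def latest(self, name):
        return self.cookbooks.get(name)


class ChefClientStub:
    """Stand-in for a node running the Chef client."""

    def __init__(self, server, run_list):
        self.server = server
        self.run_list = run_list  # names of cookbooks this node should apply
        self.applied = {}         # cookbook name -> version last applied

    def poll_once(self):
        """Pull and apply any cookbook whose version changed on the server."""
        updated = []
        for name in self.run_list:
            cookbook = self.server.latest(name)
            if cookbook and self.applied.get(name) != cookbook["version"]:
                self.applied[name] = cookbook["version"]
                updated.append(name)
        return updated


server = ChefServerStub()
server.upload("webserver", "1.0.0", ["install", "configure"])
node = ChefClientStub(server, run_list=["webserver"])

first = node.poll_once()    # first poll: the cookbook is new to this node
second = node.poll_once()   # nothing changed on the server
server.upload("webserver", "1.1.0", ["install", "configure", "tune"])
third = node.poll_once()    # the node picks up the new version
print(first, second, third)
```

In a real deployment the poll would run on a timer at the interval configured in PureApplication, and applying a cookbook would mean running its recipes rather than recording a version string.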

OpenStack

OpenStack is an open source cloud operating system that provides a set of services for controlling cloud resources in a consistent manner across the data center. OpenStack provides a common dashboard and a set of APIs (REST services) to enable portability across multiple cloud platforms.

The main OpenStack services include:

- Horizon: Dashboard

- Heat: Orchestration

- Nova: Compute

- Cinder: Block storage

- Trove: Database

- Keystone: Identity services

- Neutron: Network

- Swift: Object storage

- Glance: Image

As part of its overall open strategy, PureApplication is progressively increasing its support for OpenStack. As a technology preview, PureApplication currently supports consuming and deploying Heat Orchestration Templates (HOT), as well as accessing application instances via the OpenStack Services REST API. The goal is to eventually use the HOT format as the internal representation of patterns in the pattern engine.
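For readers who have not seen a Heat Orchestration Template, the sketch below builds a minimal one describing a single compute instance. HOT files are normally written in YAML; the template is assembled as a Python dictionary and printed as JSON here only to stay within the standard library. The resource name, image name, and flavor are placeholder assumptions, and the template has not been verified against the PureApplication technology preview.

```python
import json


def build_hot_template(server_name, image, flavor):
    """Build a minimal HOT document describing one Nova server."""
    return {
        "heat_template_version": "2013-05-23",
        "description": "Minimal single-server template (illustrative only)",
        "resources": {
            server_name: {
                "type": "OS::Nova::Server",
                # image and flavor must match names defined in the target cloud
                "properties": {"image": image, "flavor": flavor},
            }
        },
    }


# Placeholder values; substitute an image and flavor that exist in your cloud.
template = build_hot_template("app_server", "rhel-6-x86_64", "m1.small")
print(json.dumps(template, indent=2))
```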

PureApplication and Bluemix

IBM Bluemix is an implementation of IBM’s Open Cloud Architecture. Based on Cloud Foundry, Bluemix enables you to very quickly create, deploy, and manage cloud applications using a web-based interface. Bluemix runs on the SoftLayer infrastructure, and offers a good solution for quickly building front end applications on a public cloud. The integration point with PureApplication is through Bluemix’s cloud integration services, which enable you to integrate the front end with back end services running on a PureApplication system.

This offering provides a good mix where developers can quickly create applications on the front end without starting from scratch, and also leverage the enterprise-level power of a PureApplication system in a private cloud.

Conclusion

That, in a nutshell, is an overview of cloud computing options that are available, and why IBM PureApplication systems matter so much in today’s cloud world. Whether the cloud you want over you is a private, public, or hybrid cloud solution, PureApplication systems could very likely be your enabler of choice.